https://github.com/gboysking/openai-chat-session-manager

GitHub - gboysking/openai-chat-session-manager: OpenAI Chat Session Manager is a TypeScript module designed to manage chat sessi

OpenAI Chat Session Manager is a TypeScript module designed to manage chat sessions with an AI model (default : GPT-3.5 Turbo) through the OpenAI API. - GitHub - gboysking/openai-chat-session-manag...

github.com

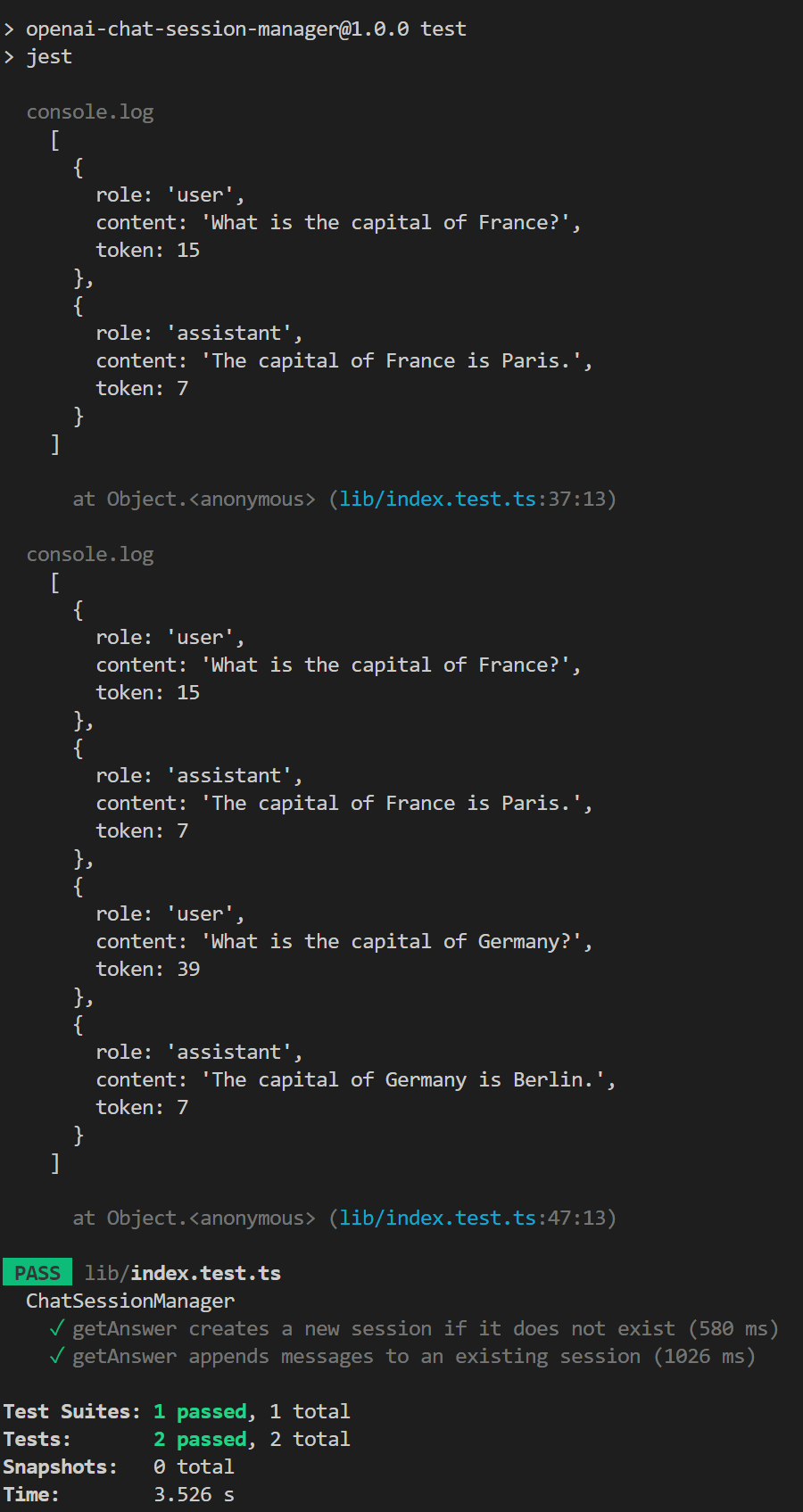

In this blog post, we'll explore how to build a chat session manager using TypeScript and AWS DynamoDB to interact with the OpenAI API and the GPT-3.5 Turbo model. The chat session manager will handle chat session storage and retrieval, allowing seamless interaction with the AI model.

Overview

Our chat session manager will have the following features:

- Communicate with the OpenAI API to send chat messages and receive AI-generated responses

- Store chat histories in AWS DynamoDB

- Support custom session storage implementations

Prerequisites

To follow along with this tutorial, you'll need:

- An OpenAI API key

- Node.js and npm installed on your local machine

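- Basic knowledge of TypeScript

- AWS credentials with access to DynamoDB, since the default session store writes to a DynamoDB table named chat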

Setting up the project

1. Create a new directory for your project and navigate to it in your terminal.

2. Initialize a new Node.js project by running <pre>npm init</pre> and following the prompts.

3. Install the required dependencies (@types/jest is included because the tsconfig.json in step 5 lists jest under types):

npm install axios aws-sdk

npm install --save-dev typescript ts-node @types/node @types/jest

4. Initialize a TypeScript project with the locally installed compiler by running

npx tsc --init

5. Update your tsconfig.json file to enable the following options:

{
  "compilerOptions": {
    "module": "CommonJS",
    "target": "ES2020",
    "esModuleInterop": true,
    "declaration": true,
    "outDir": "dist",
    "types": [
      "jest"
    ]
  },
  "exclude": [
    "node_modules",
    "**/*.test.ts"
  ]
}

6. Create a src directory to store your TypeScript files.

Building the Chat Session Manager

1. In the src directory, create a new file called ChatSessionManager.ts.

2. Define the necessary interfaces and abstract classes:

import axios from "axios";
import { ChatSessionDynamoDBTable } from "./ChatSessionDynamoDBTable";

const OPENAI_API_KEY = process.env.OPENAI_API_KEY;

// A single message in the conversation; token is local bookkeeping.
export interface ChatMessage {
  role: "user" | "assistant";
  content: string;
  token?: number;
}

// Everything stored for one chat session.
export interface ChatData {
  sessionId: string;
  created: number;
  lastUpdate?: number;
  totalTokens: number;
  messages: ChatMessage[];
}

// Storage contract that every session store must implement.
export abstract class ChatSession {
  abstract putItem(sessionId: string, data: Omit<ChatData, 'sessionId'>): Promise<void>;
  abstract getItem(sessionId: string): Promise<ChatData | null>;
  abstract deleteItem(sessionId: string): Promise<void>;
}
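The overview lists support for custom session storage implementations. As a rough sketch of what one could look like, here is an in-memory store that extends the abstract class. InMemoryChatSession is a hypothetical name that is not part of the module, and the types are repeated only so the snippet runs on its own.

```typescript
// Types repeated from ChatSessionManager.ts so the sketch is self-contained.
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
  token?: number;
}

interface ChatData {
  sessionId: string;
  created: number;
  lastUpdate?: number;
  totalTokens: number;
  messages: ChatMessage[];
}

abstract class ChatSession {
  abstract putItem(sessionId: string, data: Omit<ChatData, "sessionId">): Promise<void>;
  abstract getItem(sessionId: string): Promise<ChatData | null>;
  abstract deleteItem(sessionId: string): Promise<void>;
}

// Hypothetical in-memory store: all data is lost when the process exits.
class InMemoryChatSession extends ChatSession {
  private store = new Map<string, ChatData>();

  async putItem(sessionId: string, data: Omit<ChatData, "sessionId">): Promise<void> {
    // Copy the messages so later mutations by the caller do not leak into the store.
    const messages = data.messages.map((m) => ({ ...m }));
    this.store.set(sessionId, { ...data, sessionId, messages });
  }

  async getItem(sessionId: string): Promise<ChatData | null> {
    const found = this.store.get(sessionId);
    if (!found) return null;
    // Return a copy so callers cannot modify the stored record directly.
    return { ...found, messages: found.messages.map((m) => ({ ...m })) };
  }

  async deleteItem(sessionId: string): Promise<void> {
    this.store.delete(sessionId);
  }
}
```

A store like this is handy for local experiments or unit tests because it needs no AWS access. It could be passed as the session option, for example new ChatSessionManager({ session: new InMemoryChatSession() }), assuming the constructor uses options.session when one is provided.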

3. Implement the ChatSessionManager class:

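// Constructor options; the shape is inferred from how the constructor reads them.
export interface ChatSessionManagerOptions {
  session?: ChatSession;
  max_tokens?: number;
  temperature?: number;
}

export class ChatSessionManager {
  private session: ChatSession;
  private max_tokens: number;
  private temperature: number;

  constructor(options: ChatSessionManagerOptions) {
    // Use the supplied session store, or fall back to DynamoDB.
    this.session = options.session ?? new ChatSessionDynamoDBTable({ table: "chat" });
    // ?? keeps an explicit 0 (for example, temperature: 0) instead of replacing it.
    this.max_tokens = options.max_tokens ?? 50;
    this.temperature = options.temperature ?? 1.0;
  }

  async getAnswer(sessionId: string, prompt: string, model: string = "gpt-3.5-turbo"): Promise<ChatMessage[]> {
    // (Implementation details)
  }
}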

4. Implement the logic for the getAnswer() method:

// (Inside the getAnswer() method)

// Load the stored session, or start a new one if none exists yet.
let history: ChatData | null = await this.session.getItem(sessionId);
if (history == null) {
  history = {
    sessionId: sessionId,
    created: new Date().getTime(),
    messages: [],
    totalTokens: 0
  };
} else {
  history.lastUpdate = new Date().getTime();
}

let userMessage: ChatMessage = { role: "user", content: prompt };

const apiUrl = 'https://api.openai.com/v1/chat/completions';
const headers = {
  'Content-Type': 'application/json',
  'Authorization': `Bearer ${OPENAI_API_KEY}`,
};

// The token field is local bookkeeping, so strip it before sending.
const messagesWithoutTokens = history.messages.map((msg) => ({ role: msg.role, content: msg.content }));

const data = {
  model: model,
  messages: [...messagesWithoutTokens, userMessage],
  max_tokens: this.max_tokens,
  n: 1,
  stop: null,
  temperature: this.temperature,
};

try {
  const response = await axios.post(apiUrl, data, { headers });
  const content = response.data.choices[0].message.content;

  // Note: usage.prompt_tokens counts every message sent in this request,
  // not only the new prompt.
  const promptTokens = response.data.usage.prompt_tokens;
  const completionTokens = response.data.usage.completion_tokens;
  const totalTokens = response.data.usage.total_tokens;

  let assistantMessage: ChatMessage = { role: "assistant", content: content, token: completionTokens };
  userMessage.token = promptTokens;

  // Append both turns and persist the updated session.
  history.messages.push(userMessage);
  history.messages.push(assistantMessage);
  history.totalTokens += totalTokens;
  await this.session.putItem(sessionId, history);

  return history.messages;
} catch (error) {
  console.error('Error while fetching data from OpenAI API:', error);
  throw error;
}
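To make the request-building step easier to see in isolation, here is a small sketch that assembles the same payload as a pure function. buildRequestBody and ChatRequestBody are hypothetical names introduced for illustration; the defaults mirror the values used in this post.

```typescript
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
  token?: number;
}

// Shape of the JSON body this tutorial sends to the chat completions endpoint.
interface ChatRequestBody {
  model: string;
  messages: { role: ChatMessage["role"]; content: string }[];
  max_tokens: number;
  n: number;
  stop: null;
  temperature: number;
}

// Hypothetical helper: builds the request body without modifying the stored history.
function buildRequestBody(
  history: ChatMessage[],
  prompt: string,
  model = "gpt-3.5-turbo",
  maxTokens = 50,
  temperature = 1.0
): ChatRequestBody {
  // Drop the bookkeeping-only token field from earlier messages.
  const previous = history.map(({ role, content }) => ({ role, content }));
  return {
    model,
    // Earlier turns first, then the new user prompt as the last message.
    messages: [...previous, { role: "user", content: prompt }],
    max_tokens: maxTokens,
    n: 1,
    stop: null,
    temperature,
  };
}
```

Because the function has no side effects, the payload can be checked in a unit test without calling the API or touching DynamoDB.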

Using the Chat Session Manager

1. Create a new file called app.ts in your src directory.

2. Import the ChatSessionManager class and create an instance:

import { ChatSessionManager } from "./ChatSessionManager";

const manager = new ChatSessionManager({});

3. Use the getAnswer() method to send a message and receive a response:

(async () => {
  const sessionId = "test-session";
  const prompt = "Hello, how are you?";

  try {
    const messages = await manager.getAnswer(sessionId, prompt);
    console.log(messages);
  } catch (error) {
    console.error("Error:", error);
  }
})();
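Note that getAnswer() returns the whole message history for the session, not just the newest reply. If only the latest answer is needed, a helper along these lines could pull it out; lastAssistantReply is a hypothetical name used for illustration.

```typescript
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
  token?: number;
}

// Hypothetical helper: returns the content of the most recent assistant message,
// or null when the history contains no assistant message yet.
function lastAssistantReply(messages: ChatMessage[]): string | null {
  // Walk backwards so the newest matching message is found first.
  for (let i = messages.length - 1; i >= 0; i--) {
    if (messages[i].role === "assistant") {
      return messages[i].content;
    }
  }
  return null;
}
```

In app.ts this could be used as console.log(lastAssistantReply(messages)) in place of logging the full array.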

4. Run your app.ts file using ts-node:

npx ts-node src/app.ts

Environment Variable Configuration

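The code reads the OpenAI API key from the OPENAI_API_KEY environment variable. One way to set it is to create a .env file in your project's root directory and add the following line:

OPENAI_API_KEY=your_openai_api_key_here

Make sure to replace your_openai_api_key_here with your actual OpenAI API key. Node.js does not load .env files on its own, so either install a loader such as dotenv (npm install dotenv) and add import "dotenv/config" as the first import in app.ts, or export the variable in your shell before running the app.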
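If the key is missing, the request is sent with an invalid Authorization header and only fails once the API rejects it. A minimal sketch of failing earlier with a clearer message is shown below; requireEnv is a hypothetical helper, not part of the module.

```typescript
// Hypothetical helper: reads a required variable from an environment-like object
// and throws a descriptive error when it is missing or blank.
function requireEnv(env: Record<string, string | undefined>, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Possible usage in ChatSessionManager.ts (assumes a Node.js runtime):
// const OPENAI_API_KEY = requireEnv(process.env, "OPENAI_API_KEY");
```

Passing the environment in as a parameter keeps the helper easy to test with a plain object.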

Conclusion

In this tutorial, we've built a simple chat session manager using TypeScript and AWS DynamoDB to interact with the OpenAI API and the GPT-3.5 Turbo model. This chat session manager can store and retrieve chat histories, allowing for seamless interactions with the AI model.

This article was written with the help of ChatGPT.
